devices.

Photonicat

I got a Photonicat. I am still playing around, but it seems to work as a 5G access point. The one catch is that I have to leave it for a bit before the 5G actually starts. I have installed Tailscale on it, but have done no other poking.

quake shake.

I also set up a Quake shake. Will see if we have any earthquakes :).

More agents.

I hacked away a little on my little agent program. It now has Readline history and more logging. I have found it to be better than cortex and claude code because you don’t need a subscription. It also has no safety, which is a mixed bag at best. However, it did write the default single page theme for this blog.

It is amazing how much you can get done with only 10 quid worth of tokens.

Agent, Voices, Voice agents.

First I worked through You Should Write An Agent, then I rewrote it in golang with more tools. It is amazing how powerful and simple this is. I gave it psql access, and it can debug Postgres. I gave it nmap and shell access, and it can start finding network vulnerabilities. This is pretty great.

I also tried setting up a realtime voice agent doing talkie toaster. This is also pretty great, and it's much more responsive than other voice agents. However, it needs some tool calling to be useful.

Nanochat

I tried out Nanochat. It was a good learning exercise, but what was interesting was finding a GPU provider. I ended up on Jarvis labs. It's pretty different from any other cloud provider, likely due to the GPU cost dominating. There are no network costs, CPU costs, etc. You also pay in advance. It feels very different from normal compute - there's a constant pressure not to waste the money.

Bluesky bots

I peered at this post. I suspect it is about right, but wanted to check. It turns out it is easy to use the Jetstream to get a corpus of posts. With a corpus, I can find bots by looking for identical posts, URLs, etc.
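A minimal sketch of the "identical posts" check, assuming the corpus has already been reduced to author and text pairs. The Post type, the normalisation, and the threshold are illustrative assumptions, not the code I actually ran.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Post is a hypothetical, minimal view of one post from the corpus.
type Post struct {
	Author string // account identifier, for example a DID
	Text   string
}

// normalize lower-cases the text and collapses whitespace so that trivial
// differences do not hide a duplicate.
func normalize(s string) string {
	return strings.Join(strings.Fields(strings.ToLower(s)), " ")
}

// suspectTexts returns the normalized texts that were posted by at least
// minAuthors distinct accounts, sorted for stable output.
func suspectTexts(posts []Post, minAuthors int) []string {
	authors := map[string]map[string]bool{}
	for _, p := range posts {
		key := normalize(p.Text)
		if key == "" {
			continue
		}
		if authors[key] == nil {
			authors[key] = map[string]bool{}
		}
		authors[key][p.Author] = true
	}
	var out []string
	for text, who := range authors {
		if len(who) >= minAuthors {
			out = append(out, text)
		}
	}
	sort.Strings(out)
	return out
}

func main() {
	posts := []Post{
		{"did:a", "Buy now  https://example.com/x"},
		{"did:b", "buy now https://example.com/x"},
		{"did:c", "Lovely walk today"},
	}
	fmt.Println(suspectTexts(posts, 2))
}
```

Counting distinct authors rather than raw repeats means one account repeating itself is not flagged, only the same text showing up across accounts.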

I suspect there is a bias. On one hand, bots probably worsen the user experience. On the other, they help boost daily active users, posts, and so on.

talkie toaster

I made a talkie toaster, based on this. It was mostly written by claude, with some careful tuning of silence and making responses faster. It is every bit as annoying as you might expect.

Thames path

We walked the Thames Path from Hampton court palace to the Woolwich Arsenal. It was a lovely walk, but I didn’t keep notes.

Robot cam


One problem we have is the robot lawn mower crashing into the football goal. The over-the-top solution I have set up is:

- Have cameras pointing at the lawn. We had one of these already, I added a 2nd.

- Write a program that takes a photo through these cameras and sends it to an LLM to detect whether the goal is in the picture.

- Have Home Assistant trigger this program an hour before sunset.

- Only start the mower if the lawn is clear.

Will see how it gets on.

Tablet


I have wanted a home display tablet for automation. I have been following this guide. Stuff I have done:

- Locked down the display with Fully Kiosk.

- Set up various monitors, including the wind and rain sensors on the house.

- Set up motion detection.

- Set up charging and stop-charging automation.

two way doorbell

The goal is to have a popup on the tablet that can show who is at the gate, and to have a way to talk to them and open the gate.

I did it in a random order, but I would suggest:

- Get go2rtc working behind SSL. You need this for WebRTC testing. I did this with Caddy as a reverse proxy.

- Set up the doorbell as a stream in go2rtc. I have a fragment like the one below:

  streams:
    gate:
      - rtsp://admin:changme@192.168.1.6:554/h264Preview_01_sub
      - ffmpeg:test_doorbell#audio=opus#audio=copy

  You can test this in go2rtc, including talking to the doorbell.

- Set up browser-mod. This lets you pop up a window on the tablet.

- Then I followed 2-way-audio-intercom-for-reolink-doorbell-made-easy to actually get set up.

I tweaked a few things - changing it to have a gate icon, changing it to turn on the tablet, etc.

One problem I have not yet fixed is that WebRTC starts muted. This isn't ideal. I think this is to do with Chrome's autoplay settings.

Beara way

I walked the Beara Way a couple of weeks back. I found it a big, tough trip, but well worth doing. Tough Soles have it as one of their favorite trails.

I walked it in a counter clockwise direction, starting and ending in Glengarriff. I attempted to camp as much as possible, only staying indoors for 2 nights. My thinking was that if I was going to need to take the tent, I might as well get as much use out of it as possible.

This is a trail where you need boots.

Day 0

I drove down to Glengarriff on the hottest day of the year. This had the nicest weather and I was sorry to spend it in a car. The one question I had was where to leave the car for the week. I ended up leaving it in Casey's hotel car park. I asked them first, and had stayed there. Glengarriff is a nice little town. I did not find a campsite in Glengarriff itself.

Day 1 Glengarriff to Lauragh

This was a big day (40k) and fair tired me out. If I was to do it again, I would look at options for splitting this day up - perhaps going to Kenmare or going to Tuosist.

Looking back on Glengarriff

There are two stretches on the N71 - coming out of Glengarriff to the nature reserve, and later around Bonane. Both of these lack pavements, but both of these were actually fine for traffic.

A lot of the day (20k+) is on boreens. These are fine for walking in general, very quiet etc. However, in Drombane every house has a dog, resulting in much barking as I strolled along.

This is a very scenic day. There are the trees of Glengarriff nature reserve, then views back down to the south, and later in Uragh and beyond. It is steep and boggy around Feorus East. This was also the first encounter with cows, which resulted in a long, off-trail diversion. There is a stone circle in Uragh, and the valley is pretty.

I camped at Creveen Lodge campsite, which was very nice. I got the tent up just before it started to pour down.

There is a wine bar in Lauragh that looks very nice and does food, would suggest it for dinner.

Day 2 Lauragh to Eyeries

This starts on the road out of Lauragh, but this is very quiet. The path turns off the road and becomes muddy and craggy. There are fine views. I was going slow due to being tired from the previous day. I had hoped to get lunch in the pub in Ardgroom, but there was no lunch, just a pint. I bought some lunch from the Spar. After Ardgroom, there is a nice part near the coast, then later I got lost in the bracken. The final part into Eyeries is along the coast and is very nice. I was fairly footsore at this point. There was no dinner in Eyeries, but I did go to the pub. There was no campsite (which I knew ahead of time) and so I had booked a B&B.


Day 3: Eyeries to Allihies

I had picked up a limp, but made reasonable progress despite it. The route goes over a pass to Allihies, but first it bears left, up the hill and along. It is pretty pleasant. I didn't realize until day 5 that it comes quite close to the route to Castletownbere. I saw butterflies close to the sea. The road down from the pass is long, and has a branch off to the sea. I was pretty tired and didn't know where the branch would turn out. I would take it if doing the walk again. The route down runs past the old mine.


I stayed in the campsite in Allihies for two nights, and ate at the pub both nights. The pub food is great. There is also a shop.

Mine tower

Day 4: Dursey Island

I left the tent up and walked from Allihies to Dursey Island via the cable car. This was a miserable day for the weather, with the clouds down and covering most of the island. I didn't get all the way to the end of the island, getting as far as the Éire sign. The walk out is very nice, and would be nicer in good weather. There are several nice beaches.

I went to the fish and chip van at the cable car, but it's not great. I took the bus back to Allihies.

View of Dursey Island (taken previous day)

Can just see the tower I got to.

Day 5: Castletownbere.

I had worried about this day, having been fair whacked and limping from the first couple of days. However, it went fine. There is a long slow pull over the hill to the other side of the peninsula, on a very quiet road. The top was in cloud, and I found myself in a Coillte forest (the first plantation of this trip - such a change from the Slieve Blooms or Wicklow). I expected a slow amble down to Castletownbere, but the route turns right back up the hill again. This has great views back to Eyeries and to the north. I popped up Miskish for the view.

Looking back


Once I had seen the view, there is a long slow descent over the bog and boreen to Castletownbere. The town has everything, but I only stopped for a late lunch and a postcard. There is a stone circle before the town. However, when I got to it there were people meditating in it. I walked out of Castletownbere to the golf club, where I camped for two nights.

You can camp on Bere Island. If I had known this I would have camped there. Camping at the golf course was fine, however, you need one euro coins for the shower and I didn’t have any. There was no one around when I was there.

Golf course camping

The sentry box is left over from WW2.


Day 6: Bere Island

I didn't do the full loop of the island. I took the boat from the pontoon next to the campsite. It's worth checking when the ferry goes, because I ended up waiting an hour. I walked out to the fort at the east end, then up to the Martello tower. It was a lovely day in the sun, and in general a rest before the next two days.

In the bay there was the LÉ William Butler Yeats which was a surprise - it is rare to see the DF out.

out to sea

Day 7: Adrigole.

I awoke to rain and the knowledge this was a big day. I headed up towards Castletownbere to pick up the trail, then spent all day in the rain and mist. Lots of this is in the bog, so boots etc. were needed. There was also more cow dodging. Crossing the open rock was actually fine. This day has a long bit on the road at the end, but the road is very wide and everyone gives you space.

I stayed in the Hungry Hill campsite. I had the last of the camping food, but I didn't need to bother. There is both a shop a little up the road and an onsite burger van. During the night a bird got into my last packet of oatcakes. This is a great campsite, would recommend.

Day 8: back to Glengarriff

This day opened with a short section on the road, but the road is both busy and narrow. This was the only section of road that I felt was unsafe. However, the trail quickly turned off, and became tracks and quiet roads again.

The bulk of this day is spent passing Nareera. This was all in the bog and rain for me, resulting in standing at a post, looking into the mist to try to spot the next post. However, with the bag as light as it was going to be, this was not too hard. There is a long slow descent into Coomarkone, and then road to the top of the Glengarriff woods. I had pizza when done :).

file server

I finally finished fixing up my old file server. It now has 4x 8T drives (and an SSD), giving it 22T of RAID 5. I have moved frigate's recordings onto it, as well as some backups, using 6T. One problem is that the NFS performance seems bad. I need to work on this.

NUC tower

I 3D printed a NUC Tower, put the inserts in, and assembled it. The results are pretty good!

Hugo

My little Jekyll install started failing. I spent a little time being confused by Ruby, and decided to start using Hugo instead. Switch over complete. The hard part was poking the CSS and the content to make it look OK. I found the most basic possible Hugo site useful.

Glenmalure

Today I walked the circuit of Glenmalure.

- Start at Drumgoff Forest car park

- Carrawaystick mountain access route to Carrawaystick

- Up Corrigasleggaun

- Up Lugnaquillia

- Along to Camenabologue

- Along to Table Mountain.

- Past the three lakes to Conavalla (but not to the top)

- Up Lugduff.

- Down to the high point of the Wicklow Way

- Down the Wicklow Way past the Mullacor hut to Coolalingo bridge.

- Back up the Wicklow Way to the car park.

I’m exhausted, but it has been an excellent day.

Bluray wtf

I decided to try to find a director’s commentary for a film. I thought it might be on the Bluray, so I bought both the disk and the drive. Notes here are:

- OSX doesn’t support bluray at all. You can buy software to play disks.

- VLC will support Blu-ray on both Linux and OSX, but most Blu-rays are copy protected. There are various dubious bits of software to decrypt the disks; however, key revocation is built into both the disks and the drive. Using a new disk revokes some access.

- MakeMKV will copy a disk (including defeating copy protection) on both Linux and OSX. The resulting files are ~40G.

I was just amazed at how bad the whole experience is.

Slieve Bloom Way

I walked the Slieve Bloom Way. I took 2 days, but would suggest doing it in three. I had lovely weather on the first day, and pretty good on the 2nd. I started in Clonaslee and went anti-clockwise. Some points:

- A lot of it is through commercial forestry, like many Irish hiking trails. Lots of clear cutting.

- A lot of trees were down, likely from storm Éowyn. The photo below is over the forest track I was supposed to take.

- It feels more remote than other Irish trails. There are no villages or shops apart from when starting. You do pass through Cadamstown, but there isn't much there. This might be changing. Kinnitty castle hotel is marked as having a cafe, and Coillte has a toilet and planning permission for a cafe in Castleconnor.

- There are a lot of new MTB trails in the area. I didn't see many bikers, but it was not the weekend.

- I mostly used Open Street Map and the online maps for nav. The trail is not directly on OSM. I also took the OSI sheet, but the trail has been moved north to go through Kinnitty forest since my OSI sheet was printed. This change is an improvement, I think.

- I met other folks - some people on horseback, other hikers, and a couple of chaps doing trail maintenance, but no one else doing the trail.

wet sensors

I have been worried about a leak, so have been setting up wet sensors around the house. The first of these didn't work at all. They didn't detect a real leak, and also didn't detect anything when put on a plate of water. I bought a few more of a different model, will see if they do any better.

Photo search

Having downloaded 100s of GB of photos, I wanted to write my own photo search function. The approach I used is:

- Use a LLM to generate descriptions of all photos.

- Use reverse geocoding to map each photo to a location via the EXIF data

- Scan through the LLM output to find matches for the search term.

I didn’t really have any good ideas around ranking, and it doesn’t seem to matter in the results.

LLM wise, I started out with Google Gemini. Based on some testing I thought I would need 50-100 quid for describing every photo, so I moved on to using local AI. This seems OK, and after a week or two had described every photo. Some photos are too large for Local AI, so I fell back to using Gemini for them.

For photo locations I used the Google maps API. This turned out to be really easy to use and to get an address. I reverse geolocated all photos into text files on disk.

This actually is only ~200MB of data, so reading through it all on request was pretty fast. I found that parsing the location data JSON for each photo felt slow, so again I precomputed just the addresses, which made processing requests faster. There are speedups to be had from parallelisation etc, but I haven't done those yet.
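A minimal sketch of the scan step, assuming the descriptions and addresses are already loaded into memory. The Photo type and its field names are illustrative assumptions, and there is no ranking, as in the real thing.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Photo holds the precomputed text for one photo. The field names are
// illustrative, not the on-disk layout described above.
type Photo struct {
	Path        string
	Description string // LLM-generated description
	Address     string // reverse-geocoded address
}

// search returns the paths of photos whose description or address contains
// every word of the query, ignoring case. No ranking is attempted; results
// are sorted by path only to keep the output stable.
func search(photos []Photo, query string) []string {
	words := strings.Fields(strings.ToLower(query))
	var hits []string
	for _, p := range photos {
		text := strings.ToLower(p.Description + " " + p.Address)
		match := len(words) > 0
		for _, w := range words {
			if !strings.Contains(text, w) {
				match = false
				break
			}
		}
		if match {
			hits = append(hits, p.Path)
		}
	}
	sort.Strings(hits)
	return hits
}

func main() {
	photos := []Photo{
		{"2019/a.jpg", "A dog on a beach at sunset", "Allihies, Co. Cork"},
		{"2021/b.jpg", "Snow on a mountain path", "Maulin, Co. Wicklow"},
	}
	fmt.Println(search(photos, "snow wicklow"))
}
```

A linear scan like this is in keeping with the sizes above: a couple of hundred MB of text can be read through per request without an index.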

I used Claude to help with writing code, which was great.

Home gallery

I set up Home gallery for my vast collection of photos. So far it's going great. I used icloud photo downloader to get all photos. So far it's going OK.

Winter hiking

There’s been snow here, and I went walking.

Maulin

I went up Maulin as the snow fell, resulting in near whiteout conditions at the top. More photos.

Sugarloaf

Later in the week I went up the Sugarloaf, up the new path. More photos

Djouce

The third trip in the snow was the circuit around Djouce, War hill, Tonduff, and then up Maulin again. Lots of time in soft snow, night was starting to fall when I got down. All photos

All this was done with fgallery.

power monitoring

I've been setting up power monitoring for home. One device is a frient electricity meter, which is reporting to Home Assistant. A second class of device is three smart plugs to report on likely suspects. Will see how we get on.

Jellyfin and internal caddy

First, I set up Jellyfin. Then I decided I wanted to access it over SSL, so I set up Caddy. I have wanted one big Caddy setup, so I set up Caddy similar to the external setup, with it setting DNS names and getting certs. Two interesting points:

- I needed to set GCP DNS names. Since this isn't a GCE VM, I ended up passing the path to my GCP creds in a systemd environment variable. Works so far.

- Caddy dynamic DNS doesn't support the 192.168.1.0/24 address space. I ended up adding a hack.

Why not use the external caddy? I wanted to avoid the extra bandwidth usage.

git deploy

I wanted to be able to deploy this blog via git, onto a little Caddy server. Approach

- Have a bare git repo to push to:

  git clone --bare $repo

- Have a hooks/post-update in that repo that rebuilds the site:

  export GIT_WORK_TREE=/home/psn/homelab
  git checkout -f main
  cd /home/psn/homelab
  ./copy.sh

  This git checkout -f comes from these docs.

- Have the copy.sh rebuild the site with jekyll and copy it to a new dir.

- Fix up permissions in the directory.

- Have a symlink in the Caddyfile config, and change what the symlink is pointing to.

- Delete the older copy. I only delete copies older than 1 day.

All I need to do is to push to the bare repo.
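The symlink step is worth a sketch. The notes above don't say how copy.sh repoints the link, so this is one common way to do it rather than a record of the script: create a temporary symlink and rename it over the old one, which on POSIX filesystems replaces the link in a single step. The switchSymlink and demo names and the site-1 and site-2 directories are made up for illustration.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
)

// switchSymlink points link at target by creating a temporary symlink and
// renaming it over the old one, so readers never see a missing link.
// This assumes a POSIX filesystem, where rename replaces the link itself.
func switchSymlink(link, target string) error {
	tmp := link + ".tmp"
	_ = os.Remove(tmp) // clear any leftover from a failed run
	if err := os.Symlink(target, tmp); err != nil {
		return err
	}
	return os.Rename(tmp, link)
}

// demo builds two fake site copies in a temp dir, switches the link to each
// in turn, and returns the base name of the directory the link ends up on.
func demo() (string, error) {
	dir, err := os.MkdirTemp("", "deploy")
	if err != nil {
		return "", err
	}
	defer os.RemoveAll(dir)
	link := filepath.Join(dir, "current")
	for _, name := range []string{"site-1", "site-2"} {
		target := filepath.Join(dir, name)
		if err := os.Mkdir(target, 0o755); err != nil {
			return "", err
		}
		if err := switchSymlink(link, target); err != nil {
			return "", err
		}
	}
	got, err := os.Readlink(link)
	if err != nil {
		return "", err
	}
	return filepath.Base(got), nil
}

func main() {
	name, err := demo()
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("current ->", name)
}
```

Because the web server only ever follows the link, old copies can be deleted later at leisure, which fits the "older than 1 day" cleanup.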

I also looked at having Caddy change root with the Caddy API. The snippet below looks promising, however I would need to patch one of several routes, which did not appeal.

curl localhost:2020/config/apps | jq | less

piholemon

I set up Pi Hole exporter to see how much gets blocked. So far 5k a day.

weather sensor again

Following up from the previous post, I have put the wind sensor back up. The Pi that provides data is now netbooting and providing data to Prometheus. I have a Grafana console of the weather, and alerts if there is no data. Getting there.

frigate

I haven't posted much recently, but have added a few things to frigate:

- A Homekit camera, mounted on the side of the house. This I disconnected from Homekit and paired with Home Assistant, then set up frigate to pull from Home Assistant's go2rtc.

- Prometheus monitoring and an offline alert.

netboot

OK, so the weather sensor stopped working after the SD card died. Previously, I reduced the write rate to the card. This time, I decided to netboot the pi. The doc I used.

One twist is that initramfs started failing, causing dpkg to fail. I set /etc/initramfs-tools/initramfs.conf to include netboot, which fixed one issue.

The other problem is that Storm Bert blew the wind sensor off the shed, and I haven't put it back up yet.

More monitoring

One of my machines ran out of disk due to a scraper during the week, so I finally set up:

- email alerting

- having alerts on disk space per machine

- having some alerts on services not running.

- having some probing.

Hopefully all will improve. I found this a useful collection of prom rules.

External Caddy

I had been wanting an external end point for a few services. I had been using Caddy internally, and I wanted to expose it to the internet. I started out looking at static IP options, but they are expensive. One solution is to update DNS when the IP changes. I started out looking at having a standalone shell script to set DNS names, but Caddy supports dynamic DNS (and docs). I have been using Tailscale to send traffic between the Caddy reverse proxy and the actual service.

This featured figuring out GCP API calls from a GCE instance. I had to set up a service account to make this work.

I also had to patch caddy-dynamicdns to support a zone ending in a dot: psn.af.

Traffic again

More commute poking.

Luas

Firstly, I set up monitoring for the two LUAS stops I use, using The API. There is also data on lag which I have not used. I will wait to see how useful it is.

Secondly, I set up polling every 10 minutes for how long Google thinks a commute will take, including whether traffic is slow (yellow in Google maps) or stopped (red). Will see what emerges. Cost so far is 8 USD a day.

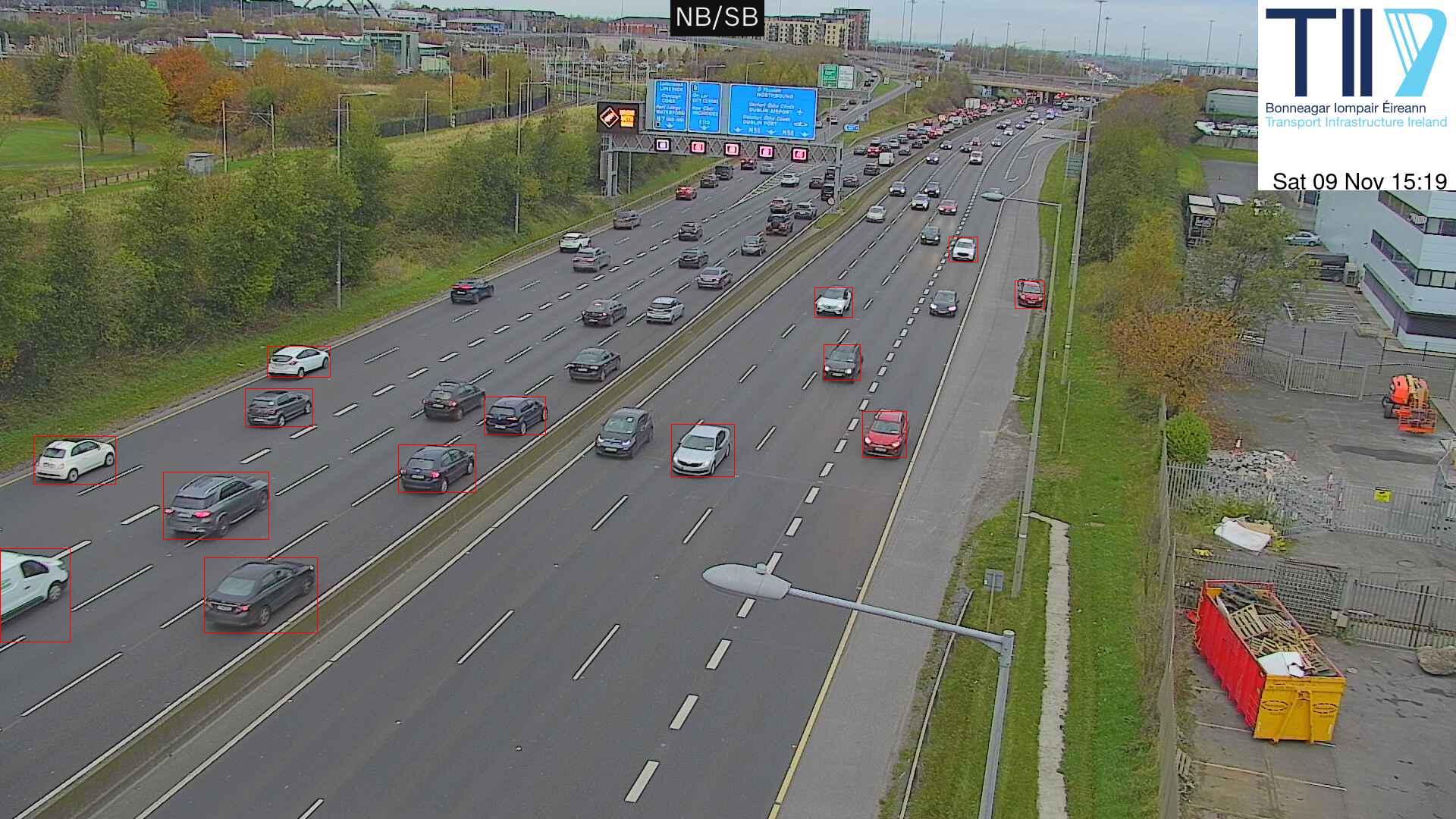

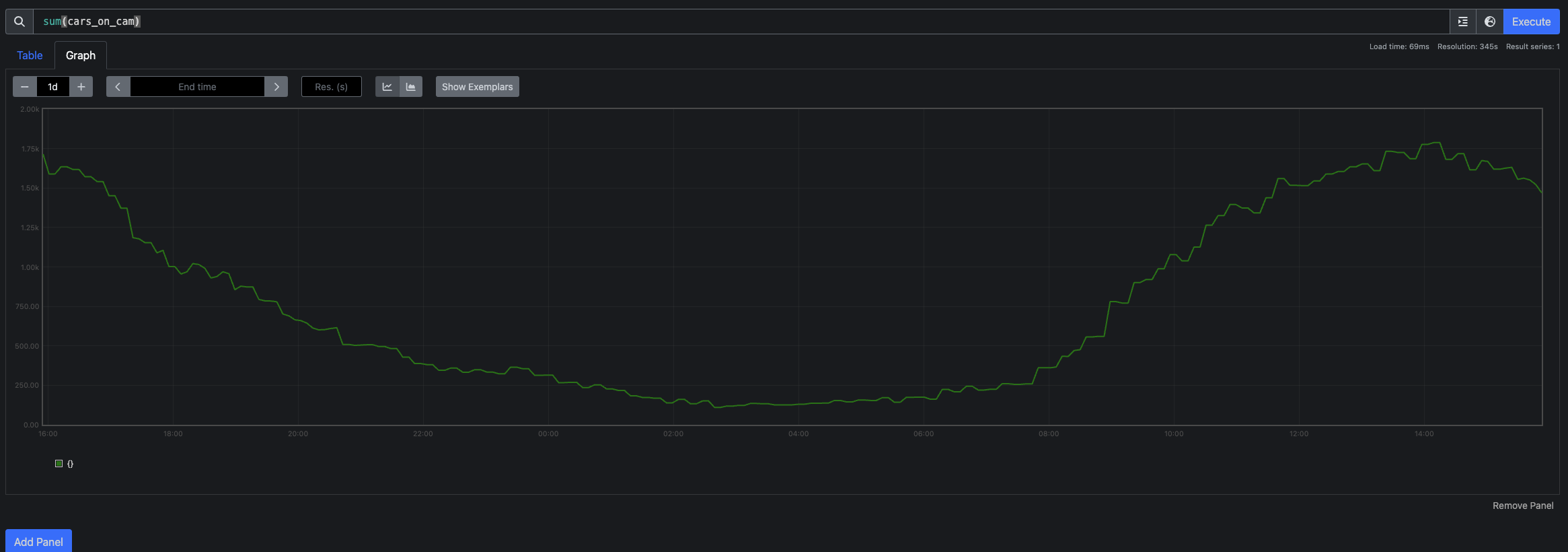

Traffic cams finished

I reached the end of poking traffic cams. I ended up with a system that:

- Polled all the cameras every 10m.

- Ran each camera image through an image recognition model that counts the number of cars in the image.

- Exported the count to Prometheus.
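For the last step, here is a rough sketch of what the exported data looks like in the Prometheus text exposition format. A real exporter would more likely use the Prometheus client library and serve this at /metrics. The formatting is hand-rolled here only to keep the example self-contained, and the metric name traffic_cam_cars and the camera names are made up.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// renderMetrics formats per-camera car counts in the Prometheus text
// exposition format. The metric name traffic_cam_cars is an assumption.
// Camera names are assumed to be plain printable ASCII, so that Go's %q
// quoting matches Prometheus label escaping.
func renderMetrics(counts map[string]int) string {
	names := make([]string, 0, len(counts))
	for name := range counts {
		names = append(names, name)
	}
	sort.Strings(names) // stable output makes scrapes easier to diff
	var b strings.Builder
	b.WriteString("# HELP traffic_cam_cars Cars detected in the latest camera image.\n")
	b.WriteString("# TYPE traffic_cam_cars gauge\n")
	for _, name := range names {
		fmt.Fprintf(&b, "traffic_cam_cars{camera=%q} %d\n", name, counts[name])
	}
	return b.String()
}

func main() {
	// Hypothetical camera names and counts.
	fmt.Print(renderMetrics(map[string]int{"cam-north": 7, "cam-south": 3}))
}
```

A gauge fits here because the count goes up and down with each poll, rather than accumulating.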

This blog is a very similar counting system.

How well does it work?

Here’s some traffic I got stuck in.

You can see that the bulk of the traffic is to the top middle of the picture, and isn’t counted.

Here’s another problem: traffic is all on one side of the road.

Also, the weather.

However, sometimes the results are artistic.

And a graph

Traffic cams again

I went back to poking traffic cameras. Since I last started looking TII has replaced their website. However, you can still get the camera feeds. I tried running an image through an image recognition model:

weather

I have a Pimoroni weather station that I have been poking at, on and off, for a year or so. The first setup was a Pi Zero in a Stevenson screen powered by battery. This doesn't work well when it's cold.

The 2nd approach was to run a cat6 cable out the house, entrench it over the lawn, and into the shed near the sensor. I can then use the cat6 cable to deliver power (with PoE) and networking to a raspberry Pi, with the sensor plugged in.

I wrote a custom HTML graph library for an air quality meter a few years back, and I reused it for this project. It had a few issues:

- It did many many small writes to the Pi’s SD card, resulting in sadness. This was an attempt to keep history through restarts of the program.

- It kept all history in memory, resulting in an OOM.

I think I’ve fixed both of these and have set up Prometheus exporting as well. Hopefully we will get some weather data.

bluesky

Some notes from poking around at bluesky’s decentralisation.

instance

Running your own instance is trivial. There are docs, everything just works, etc. I was a little taken by the “run a new server so you can run a docker container”, but otherwise totally fine.

DNS

Bluesky requires you to point the A record for your domain to your bluesky PDS. This would be problematic if you're already using your domain for something else, which I imagine is the common case. I'm surprised they didn't use SRV for this.

Migration

The account migration docs both have a warning, and tell you that you can't migrate back to bsky.social. This is surprising, since account portability is held up as one advantage to bluesky in their paper and protocol docs. I've certainly seen the need for being able to move in the fediverse.

Feed

It's really easy to see a live feed of posts with Jetstream. I wrote code to handle them all, which was trivial. Bluesky is running at about 300 actions/s. A trivial Go program I wrote can keep up with the feed. So, building anything might be doable.

traffic.

There are a few different sources of traffic data.

Traffic data

Traffic loops. There's a nice website of loop locations. It is sadly difficult to just get all this data. However, you can explore one spot pretty closely. For example this is the M11 between M50/M11 and Bray North. Going south, the PM peak is around 1500. This was surprising to me - I had thought that the peak would be around 1700-1800. Going north, the peak is at 0700, but traffic doesn't drop to normal until 1000. I had felt that waiting until 0900 was a good move, but I might as well set off at 0830.

Tom tom

tomtom has an API. However, it requires an account etc which I don’t have.

Google maps

Google maps turns out to have an API. This will tell you everything you see in the Google maps app or website, including the expected duration, routes, and traffic on the route. It can also take a future time. You can use the polyline decoder to put the route on a map.
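The route comes back as an encoded polyline string, and decoding it is simple enough to do by hand. Below is a minimal sketch of a decoder for Google's encoded polyline format at the default 1e5 precision. The Point type and function name are mine, and the sample string is the example from Google's documentation of the format.

```go
package main

import (
	"errors"
	"fmt"
)

// Point is a latitude/longitude pair in degrees.
type Point struct{ Lat, Lng float64 }

// decodePolyline decodes Google's encoded polyline format (precision 1e5).
// Each coordinate is stored as a delta from the previous point.
func decodePolyline(s string) ([]Point, error) {
	i := 0
	// next reads one variable-length, zig-zag encoded signed integer.
	next := func() (int, error) {
		var result, shift uint
		for {
			if i >= len(s) || s[i] < 63 {
				return 0, errors.New("malformed polyline")
			}
			b := uint(s[i]) - 63
			i++
			result |= (b & 0x1f) << shift
			shift += 5
			if b < 0x20 { // continuation bit clear: last chunk
				break
			}
		}
		if result&1 != 0 {
			return ^int(result >> 1), nil // odd values encode negatives
		}
		return int(result >> 1), nil
	}
	var pts []Point
	lat, lng := 0, 0
	for i < len(s) {
		dLat, err := next()
		if err != nil {
			return nil, err
		}
		dLng, err := next()
		if err != nil {
			return nil, err
		}
		lat += dLat
		lng += dLng
		pts = append(pts, Point{float64(lat) / 1e5, float64(lng) / 1e5})
	}
	return pts, nil
}

func main() {
	pts, err := decodePolyline("_p~iF~ps|U_ulLnnqC_mqNvxq`@")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	for _, p := range pts {
		fmt.Printf("%.5f, %.5f\n", p.Lat, p.Lng)
	}
}
```

The decoded points can then be handed to any map library as a line.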

Google has billing for the API, but I hope to fall into the 200 dollars free setup.

I don’t know how good google maps is. The variation in timings is not large. However, it does show a few different routes and traffic jams.

traffic cams etc

There’s also Traffic.tii.ie which is a nice overlay on open street map. It has a few sources of information:

- Current roadworks and incidents

- Images from traffic cameras

- current weather, including more cameras

- current journey times

- what the overhead signs say

The cameras are interesting. Firstly all the camera images are loaded on page load, for both the weather and traffic cams. However the image is a 640x480 still. The weather cams come with current weather information. I suspect that the traffic cams are being downscaled, and there is support for ALPR.

Anyway, still poking around at all this stuff.

sorting out an old file server

I've been trying to sort out the contents of my old file server. It's been sitting powered off for a few years. I had been dumping data onto it forever on the assumption that one day I would sort it out. Today's the day!

Backups

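Before starting I took a backup of everything to an external HDD with rsync -avz -P. This was itself a slow and messy process, because the server locks up after a few hours of copying. It took many restarts and a polling loop along these lines to tell me when the server had locked up:

while true; do
  # ConnectTimeout keeps a hung server from blocking the check.
  if ! ssh -o ConnectTimeout=1 sewer true; then
    # The recipient is a placeholder; use your own address.
    echo 'server failed' | mail -s 'server failed' you@example.com
  fi
  sleep 60  # poll once a minute rather than spinning
done

With that, the backup got done. I then reinstalled the server, both due to it being 2 Debian releases behind, and in the hope the lockups had been fixed.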

Dupes

I wanted to find all identical files. First I wrote a program that used Go's filepath.Walk to list all files, and to take a hash of each file. There are filenames with spaces and other messiness, so I put the results into a CSV file.

The first version of this program had poor performance, so I ended up writing a version with a worker pool to do the hashing. It is using 100% disk and 100% CPU, so that's good. I think there are still optimisations to make - for example I would like to only hash the first 1MB of each file, then later hash the full file on finding a possible dupe.
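A minimal sketch of the worker pool shape, not the actual program: one goroutine walks the tree with filepath.Walk and feeds paths to a fixed number of hashing workers. SHA-256, the Result type, and the demo helper are all assumptions for illustration.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"io"
	"os"
	"path/filepath"
	"sync"
)

// Result pairs a file path with the hex SHA-256 of its contents.
type Result struct {
	Path, Hash string
	Err        error
}

// hashFile streams one file through SHA-256.
func hashFile(path string) (string, error) {
	f, err := os.Open(path)
	if err != nil {
		return "", err
	}
	defer f.Close()
	h := sha256.New()
	if _, err := io.Copy(h, f); err != nil {
		return "", err
	}
	return hex.EncodeToString(h.Sum(nil)), nil
}

// hashTree walks root and hashes every regular file using a pool of workers.
func hashTree(root string, workers int) ([]Result, error) {
	if workers < 1 {
		workers = 1
	}
	paths := make(chan string)
	results := make(chan Result)
	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for p := range paths {
				sum, err := hashFile(p)
				results <- Result{Path: p, Hash: sum, Err: err}
			}
		}()
	}
	var walkErr error
	go func() {
		walkErr = filepath.Walk(root, func(p string, info os.FileInfo, err error) error {
			if err != nil {
				return err
			}
			if info.Mode().IsRegular() {
				paths <- p
			}
			return nil
		})
		close(paths)   // no more work
		wg.Wait()      // let the workers drain
		close(results) // then let the collector finish
	}()
	var out []Result
	for r := range results {
		out = append(out, r)
	}
	return out, walkErr
}

// demo hashes a small temp tree and returns hashes keyed by base name.
func demo() (map[string]string, error) {
	dir, err := os.MkdirTemp("", "dupes")
	if err != nil {
		return nil, err
	}
	defer os.RemoveAll(dir)
	files := map[string]string{"a.txt": "same", "b b.txt": "same", "c.txt": "different"}
	for name, body := range files {
		if err := os.WriteFile(filepath.Join(dir, name), []byte(body), 0o644); err != nil {
			return nil, err
		}
	}
	results, err := hashTree(dir, 4)
	if err != nil {
		return nil, err
	}
	hashes := map[string]string{}
	for _, r := range results {
		if r.Err != nil {
			return nil, r.Err
		}
		hashes[filepath.Base(r.Path)] = r.Hash
	}
	return hashes, nil
}

func main() {
	hashes, err := demo()
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(len(hashes), "files hashed; a == b:", hashes["a.txt"] == hashes["b b.txt"])
}
```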

Given a CSV file, I wrote a program to read it and group by hash. This is suggesting 9% savings so far.
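A minimal sketch of the grouping step, assuming the CSV has three columns: path, hash, and size in bytes. That column layout is my assumption, as are the function names. The saving is every byte beyond the first copy of each hash.

```go
package main

import (
	"encoding/csv"
	"fmt"
	"io"
	"strconv"
	"strings"
)

// dupeSavings reads rows of path,hash,size and returns the bytes that could
// be reclaimed by keeping one copy per hash, plus the total bytes seen.
func dupeSavings(r io.Reader) (int64, int64, error) {
	var saved, total int64
	seen := map[string]bool{}
	cr := csv.NewReader(r) // handles quoted paths with spaces and commas
	for {
		rec, err := cr.Read()
		if err == io.EOF {
			return saved, total, nil
		}
		if err != nil {
			return 0, 0, err
		}
		if len(rec) != 3 {
			return 0, 0, fmt.Errorf("want 3 columns, got %d", len(rec))
		}
		size, err := strconv.ParseInt(rec[2], 10, 64)
		if err != nil {
			return 0, 0, err
		}
		total += size
		if seen[rec[1]] {
			saved += size // a later copy of a hash we already have
		} else {
			seen[rec[1]] = true
		}
	}
}

// savingsFromString is a small convenience wrapper for in-memory CSV data.
func savingsFromString(data string) (int64, int64, error) {
	return dupeSavings(strings.NewReader(data))
}

func main() {
	data := "\"holiday pics/a.jpg\",h1,100\nbackup/a.jpg,h1,100\nnotes.txt,h2,50\n"
	saved, total, err := savingsFromString(data)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%d of %d bytes are duplicates\n", saved, total)
}
```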

Next idea was to find whole directories that contained the same files. This I implemented by sorting the csv file, and processing each directory. I took a rolling hash of the file name (without the directory name) and the hash of the file contents. If two directories had the same files (names and contents, hashed in order), they are a match. This is again a 9% improvement, but likely not cumulative with file matches.
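A minimal sketch of the directory matching idea. Instead of a rolling hash, this version feeds the sorted name and content-hash pairs of each directory through SHA-256, which is a swap I made to keep the example short. The File type is illustrative, and only files directly inside a directory are considered, not sub-trees.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"sort"
)

// File is one row of the listing: directory, base name, content hash.
type File struct{ Dir, Name, Hash string }

// dirFingerprints returns, per directory, a hash over the sorted
// (name, content hash) pairs of the files directly inside it.
func dirFingerprints(files []File) map[string]string {
	byDir := map[string][]File{}
	for _, f := range files {
		byDir[f.Dir] = append(byDir[f.Dir], f)
	}
	out := map[string]string{}
	for dir, entries := range byDir {
		sort.Slice(entries, func(i, j int) bool { return entries[i].Name < entries[j].Name })
		h := sha256.New()
		for _, f := range entries {
			// NUL and newline separators keep names and hashes from running together.
			fmt.Fprintf(h, "%s\x00%s\n", f.Name, f.Hash)
		}
		out[dir] = hex.EncodeToString(h.Sum(nil))
	}
	return out
}

// matchingDirs groups directories that share a fingerprint, keeping only
// groups with two or more members. Output is sorted for stability.
func matchingDirs(files []File) [][]string {
	byPrint := map[string][]string{}
	for dir, fp := range dirFingerprints(files) {
		byPrint[fp] = append(byPrint[fp], dir)
	}
	var groups [][]string
	for _, dirs := range byPrint {
		if len(dirs) > 1 {
			sort.Strings(dirs)
			groups = append(groups, dirs)
		}
	}
	sort.Slice(groups, func(i, j int) bool { return groups[i][0] < groups[j][0] })
	return groups
}

func main() {
	files := []File{
		{"/photos/2019", "a.jpg", "h1"}, {"/photos/2019", "b.jpg", "h2"},
		{"/backup/2019", "b.jpg", "h2"}, {"/backup/2019", "a.jpg", "h1"},
		{"/misc", "a.jpg", "h1"},
	}
	fmt.Println(matchingDirs(files))
}
```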

The next idea is to try and find whole sub-trees of directories that are the same. This is pretty promising. This is finding 24% savings.

License plate recognition

Previously I had been using platerecognizer.com for license plate recognition. A couple of days back they sent a note saying I was at 95% of quota, followed by another saying I was at 100%. Quota is 2500 images, suggesting 250 images a day. That seems a lot. I did some things about this:

- Firstly, I debugged why so many images. I turned on logging of the plate images and a web server to show me the images. It turned out that many were cars driving by. I added filtering for cars on the road to frigate, and the number of detections dropped.

- Secondly, I added some Prometheus metrics to frigate_plate_recognizer.

- Thirdly, I set up and started using Code Project AI for license plate recognition. The main problem with this was actually testing it with curl. The command I ended up with is:

  curl -F upload=@Downloads/videoframe_1987228.png -X POST http://codeproject-ai.tail464ff.ts.net:32168/v1/vision/alpr

So far performance is OK.

cameras again

Third post on cameras. Last time I had

- frigate with feeds from two cameras.

- frigate sending messages to home assistant via MQTT

- Home assistant opening the gate.

This time I set up detection of cars for both cameras. I don’t want people opening them all the time. This featured writing a home assistant automation using MQTT directly, which is working fine. After some debugging, I had this working ok. It works surprisingly well.

Next, on the outer side, I set up frigate plate recognizer to detect if it was one of our plates on the car that showed up. If it is, open!

Debugging this featured driving the car in and out several times. I'm sure this looked totally normal.

I also found an external HD to put all frigate's data on. So far so good.

cameras

I have a few other cameras. We have a Neolink battery powered camera pointing at the stream. It turns out not to support RTSP, and instead to talk its own protocol. There are a few blog posts on making these cameras work. I didn't have much success. I'm going to try the homehub next.

I’ve also set up scrypted and frigate. I’m still playing around with these.

cameras again

Some more information about the cameras. I ended up running cat5e cable to the pump house and then on to the gate. I started out with a wireless bridge, but I wasn't sure how well that would work long term. The cable install was not so bad, and it is mostly buried. I also took the chance to run a cable to near the picnic table and set up an outside AP there. There are a lot of warnings about using wireless for camera feeds.

Once I had the cameras running, I poked around at NVR systems. I started out with scrypted but moved on to frigate. I have frigate running on the same NUC as everything else with a coral TPU for video processing, and so far that is working well. I like the frigate UI for reviewing changes, feeds etc. The motion detection is also really good, and surprisingly doesn't trigger when the gate itself moves.

I’ve set up frigate behind tailscale. I also have a home assistant box also on tailscale. Home assistant provides a MQTT broker. I have set up home assistant to include frigate as an integration, and to open the gate when someone approaches from the inside. This is working pretty good so far, but still needs some work.

I did end up setting up SSH on HAOS, which is quite a process. On the way, docker ate my nixos machine so yey.

doorbell

We have just installed a Video doorbell on the gate, and I wrote a program to open it when someone says friend. Notes on this.

Doorbell

The doorbell is a Reolink PoE doorbell. It is an always on video camera, with audio. To install it I ended up drilling a hole through the gate (for the ethernet cable) and running it to the pump house. The pump house has power, so I set up a wifi bridge from the pump house to the main house. There’s also now a switch in the pump house. Camera feeds over wifi is generally discouraged, but so far this is OK.

Home bridge

I started out pairing the doorbell with Home bridge including setting up camera UI and ffmpeg for homebridge. This didn’t support the actual doorbell, so I started using https://www.scrypted.app/. Works great so far.

Speech

I initially looked at Open WakeWord but it has a limited set of trigger words. speech_recognition is much more interesting. It has N different providers that are ready to go. I found that the Google Speech Recognition worked well enough not to set up the others. I also tried out whisper but it was both slower and less accurate than the Google API.

Getting a sample

Ideally, I would use a streaming API. What I ended up doing was taking a short recording (a few seconds) and then converting it to text. This gist was where I took the idea from.

I used ffmpeg to take a few seconds of recording from the RTSP stream and convert it into the right format.

Gate

I used aioremootio to open the gate. The API documentation put me off writing my own client.

test camera

The cheapo PC420 IP camera I bought ages ago has stopped working (likely due to not having a firmware upgrade in 4 years) so blergh. I had hoped to use it for testing. In general, making linux sounds work was the most annoying part of this project.

Netboot

I’ve been playing around with netboot for raspberry pi. Goals are:

- easier reinstalls when stuff goes wrong.

- diskless nodes - there are a lot of problems with SD card wearout, and NVMe drives are expensive.

One problem is that a new Pi 5 will not netboot out of the box, requiring an EEPROM flash.

NFS

What I have working is booting from tftp and nfs. One doc on this. This works but is kinda messy. The client has read write access to the files on NFS, so you can’t share the filesystem across several clients.

Read Only NFS

I followed this guide to get read only NFS, works fine.

Nobodd

I’ve had a go with Nobodd. It hasn’t been very successful.

Future ideas

Debugging NTP

I spent a little time debugging NTP for the nodes of my k8s cluster. I have a local NTP server, and the k8s nodes were not using it, they were using the debian NTP pool. Debugging went:

- Is the DHCP server providing the NTP server address? Yes, visible in /var/lib/dhcp/dhclient.leases.

- Is systemd-timesyncd using this NTP server? No, visible in timedatectl timesync-status -a.

I first found the dhcp exit hook /etc/dhcp/dhclient-exit-hooks.d/timesyncd which makes a /run timesyncd config (but something cleans it up promptly). I then found in the timesyncd logs the error

Timed out waiting for reply from 192.168.1.48:123 (192.168.1.48).

I then proved with tcpdump that packets were making it as far as the NTP server before timing out (ping, traceroute and curl all worked, suggesting it was not a network problem). I then worked out that the NTP server was not accepting requests from the k8s VLAN. One reload later, and we were working.

I wanted to fix all machines, so I ran

for n in node{2..6}; do ssh $n sudo dhclient -v eth0; done

for n in node{2..6}; do ssh $n timedatectl timesync-status; done

To rerun the dhcp hooks on each.

Useful commands

Show status of timesyncd.

timedatectl timesync-status -a

ntpdate has been reimplemented as ntpdig.

ntpdate -d 192.168.1.48

Logs of timesyncd

journalctl -u systemd-timesyncd --no-hostname --since "1 day ago"
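Alongside these commands, a tiny SNTP probe makes it easy to check from any node whether the server answers at all. This is a minimal sketch, not what I actually ran: the function names are made up for illustration, it only reads the transmit timestamp, and it ignores round trip delay. The default address is the NTP server from the log line above.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
	"net"
	"os"
	"time"
)

// ntpEpochOffset is the number of seconds between the NTP epoch (1900)
// and the Unix epoch (1970).
const ntpEpochOffset = 2208988800

// buildRequest returns a 48 byte SNTP client request.
// First byte: leap indicator 0, version 4, mode 3 (client).
func buildRequest() []byte {
	req := make([]byte, 48)
	req[0] = 0x23
	return req
}

// parseTransmitTime extracts the server transmit timestamp
// (bytes 40..47 of the reply) as a time.Time.
func parseTransmitTime(resp []byte) (time.Time, error) {
	if len(resp) < 48 {
		return time.Time{}, errors.New("short NTP reply")
	}
	secs := binary.BigEndian.Uint32(resp[40:44])
	frac := binary.BigEndian.Uint32(resp[44:48])
	nanos := (int64(frac) * 1e9) >> 32
	return time.Unix(int64(secs)-ntpEpochOffset, nanos).UTC(), nil
}

// query sends one request and waits up to timeout for the reply.
func query(addr string, timeout time.Duration) (time.Time, error) {
	conn, err := net.DialTimeout("udp", addr, timeout)
	if err != nil {
		return time.Time{}, err
	}
	defer conn.Close()
	if err := conn.SetDeadline(time.Now().Add(timeout)); err != nil {
		return time.Time{}, err
	}
	if _, err := conn.Write(buildRequest()); err != nil {
		return time.Time{}, err
	}
	resp := make([]byte, 48)
	n, err := conn.Read(resp)
	if err != nil {
		return time.Time{}, err
	}
	return parseTransmitTime(resp[:n])
}

func main() {
	addr := "192.168.1.48:123" // the local NTP server from the timesyncd log
	if len(os.Args) > 1 {
		addr = os.Args[1]
	}
	t, err := query(addr, 2*time.Second)
	if err != nil {
		fmt.Println("no reply:", err)
		return
	}
	fmt.Println("server time:", t, "rough offset:", time.Until(t))
}
```

A timeout here while ping still works points at the NTP server's access rules rather than the network, which is what the problem turned out to be.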

Weather and k8s

I have a netatmo weather station, that has been providing data for the last year. I would like to build a little display that shows the current wind speed.

The display

I used a 7 inch touchscreen display for the display. I have ~/.config/lxsession/LXDE-pi/autostart as below.

@xset s off

@xset -dpms

@xset s noblank

@xrandr --output DSI-1 --rotate inverted

@pcmanfm --desktop --profile LXDE

@/usr/bin/chromium-browser --kiosk https://home-display.tail464ff.ts.net/ --enable-features=OverlayScrollbar

There’s lots of ideas and advice out here.

The API

Auth.

The hardest part of the API was auth. I implemented the three way oauth dance to get a token, and to refresh tokens before expiry. Once I had a token, the actual calls were simple enough. The API only provides one data point an hour.
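The "refresh before expiry" part is the bit that is easy to get subtly wrong, so here is a minimal sketch of the check. It is not the code from my program: the token struct, the refresher callback and the five minute margin are all assumptions for illustration, and the real refresh call would hit the netatmo token endpoint.

```go
package main

import (
	"fmt"
	"time"
)

// token holds what an OAuth token endpoint typically returns.
type token struct {
	Access  string
	Refresh string
	Expiry  time.Time
}

// needsRefresh reports whether the token expires within margin of now.
func (t token) needsRefresh(now time.Time, margin time.Duration) bool {
	return !now.Add(margin).Before(t.Expiry)
}

// refresher is a hypothetical callback that swaps a refresh token for a new token.
type refresher func(refreshToken string) (token, error)

// ensureFresh returns t unchanged while it is still comfortably valid,
// otherwise it returns whatever the refresher hands back.
func ensureFresh(t token, now time.Time, margin time.Duration, refresh refresher) (token, error) {
	if !t.needsRefresh(now, margin) {
		return t, nil
	}
	return refresh(t.Refresh)
}

func main() {
	now := time.Now()
	old := token{Access: "a1", Refresh: "r1", Expiry: now.Add(2 * time.Minute)}
	// fake stands in for the real HTTP call to the token endpoint.
	fake := func(rt string) (token, error) {
		return token{Access: "a2", Refresh: rt, Expiry: now.Add(3 * time.Hour)}, nil
	}
	fresh, err := ensureFresh(old, now, 5*time.Minute, fake)
	fmt.Println(fresh.Access, err)
}
```

Calling ensureFresh before every API request keeps the refresh logic in one place, at the cost of needing somewhere to persist the new token afterwards.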

K8s

This is the first thing I have built on the k8s cluster, so there was some yak shaving to get stuff working.

private docker image registry

I ended up running a private docker registry for images, because I found I kept building new images all the time. I set the registry up on docker itself.

I ended up with a script to build new images, using commands like below.

docker build -t registry.tail464ff.ts.net/home-display -t home-display -f Dockerfile .

docker push registry.tail464ff.ts.net/home-display

Secrets

This has a collection of secrets - the oauth client ID and client secret, and then the token and refresh tokens. I put these in a k8s secret, using kubectl to get started (docs). This is working pretty well so far.

K8s Cluster config

For the secrets, I ended up copying and pasting the code below to enable access to the secrets from within or outside the cluster.

tailscale

I’m still using the tailscale ingress. I discovered it needs a TLS server on the pod to actually work.

k8s

I’ve been trying to build a home k8s cluster. I started at microk8s, then moved on to the main stack.

hardware.

I started with two Raspberry Pis. I used an NVMe base for each, and a PoE hat. The fan is pretty quiet, and I hope that the NVMe drives will have better lives.

cluster

I used kubeadm init to start the cluster, and flannel for networking. I spent a long time debugging cgroups, see this blog. I also had problems with dns - I turned off tailscale dns.

tailscale

I have set up tailscale operator to provide HTTPS access to the dashboard.

TFTP server.

Some quick notes for myself. On a nixos machine, I used the package tftp-hpa. You need to enable it.

environment.systemPackages = [

pkgs.tftp-hpa

];

services.tftpd.enable = true;

It’s running via xinetd, debug with

systemctl status xinetd.service

Ruuvi

I wrote a program to download all data from my Ruuvi tags, via the ruuvi cloud. There is an API. Couple quick points:

- Most API calls need an Authorization bearer token from the user. To get a token, I logged in to station.ruuvi.com and poked around in dev tools until I found one.

- The actual data is still in the encoded format used by the hardware tags. github.com/peterhellberg/ruuvitag will decode this data. I had to remove the first 5 bytes.

Naturecam

It’s lovely weather, so I tried throwing together a pi zero naturecam. Will see if we get pictures.

SQLDB: now with parser

I finally filled in the original sin for my little sql engine: writing a parser for sql itself. This is a bit painful and I would be tempted to rewrite it. I used participle for the parser.

A dumb little SQL engine

A dumb little SQL engine.

I wrote a little SQL engine, based on ideas floating around my head. Maybe one day I will write a bit more.

Features etc

- filters (select, where), group by.

The big thing missing is a parser for SQL itself.

Key interface: iterable

type iterable interface {

next(context.Context) (*row, error)

}

This is used by each operation (select, group by, order by etc) to provide a new row of results to the calling operation. For example, where would call next on its source until it gets a row matching the where clause, then it would return that row to the caller.

An errStop error is returned when there are no more rows to return.
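To make the pull model concrete, here is a minimal sketch of a where operation sitting on top of a source. It is a simplified stand-in rather than the engine's code: the row layout, sliceSource, the predicate function and drain are all assumptions for illustration. Only the iterable interface and the errStop idea come from the description above.

```go
package main

import (
	"context"
	"errors"
	"fmt"
)

// row is a simplified stand-in for the engine's row type.
type row struct{ cols map[string]int }

// errStop signals that there are no more rows.
var errStop = errors.New("no more rows")

type iterable interface {
	next(context.Context) (*row, error)
}

// sliceSource is a hypothetical in-memory source of rows.
type sliceSource struct {
	rows []*row
	pos  int
}

func (s *sliceSource) next(ctx context.Context) (*row, error) {
	if err := ctx.Err(); err != nil {
		return nil, err
	}
	if s.pos >= len(s.rows) {
		return nil, errStop
	}
	r := s.rows[s.pos]
	s.pos++
	return r, nil
}

// where pulls rows from src until one matches pred, then returns it.
type where struct {
	src  iterable
	pred func(*row) bool
}

func (w *where) next(ctx context.Context) (*row, error) {
	for {
		r, err := w.src.next(ctx)
		if err != nil {
			return nil, err // includes errStop from the source
		}
		if w.pred(r) {
			return r, nil
		}
	}
}

// drain collects column "a" from every row until errStop.
func drain(it iterable) ([]int, error) {
	var out []int
	for {
		r, err := it.next(context.Background())
		if errors.Is(err, errStop) {
			return out, nil
		}
		if err != nil {
			return nil, err
		}
		out = append(out, r.cols["a"])
	}
}

func main() {
	src := &sliceSource{rows: []*row{
		{cols: map[string]int{"a": 1}},
		{cols: map[string]int{"a": 5}},
		{cols: map[string]int{"a": 2}},
	}}
	// Equivalent in spirit to: select a from src where a < 3
	w := &where{src: src, pred: func(r *row) bool { return r.cols["a"] < 3 }}
	fmt.Println(drain(w))
}
```

Because where is itself an iterable, operations stack: a group by or order by can take a where as its source without knowing what sits underneath.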

Key interface: expr

type expr interface {

value(r *row) (interface{}, typ, error)

}

This is used to evaluate different expressions in the context of a single row. Example expressions:

- select a+b - here a+b is the expression.

- where a<3 - here a<3 is the expression.

Expressions are currently

- constants.

- variables extracted from the row.

- expressions defined as other expressions, for example:

  - a+b

  - regexp_extract(foo, 'foobarbaz')
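The three kinds of expression compose naturally. Below is a minimal sketch of that composition; it is not the engine's code. The typ constants, the row layout, and the constant, variable, add and evalInt names are assumptions for illustration, and only the expr interface signature is taken from above.

```go
package main

import (
	"errors"
	"fmt"
)

// typ is a stand-in for the engine's type tag.
type typ int

const (
	typInt typ = iota
	typString
)

// row is a simplified stand-in for the engine's row type.
type row struct{ cols map[string]interface{} }

type expr interface {
	value(r *row) (interface{}, typ, error)
}

// constant always evaluates to the same int.
type constant struct{ v int }

func (c constant) value(*row) (interface{}, typ, error) { return c.v, typInt, nil }

// variable looks a column up in the row.
type variable struct{ name string }

func (v variable) value(r *row) (interface{}, typ, error) {
	val, ok := r.cols[v.name]
	if !ok {
		return nil, 0, fmt.Errorf("unknown column %q", v.name)
	}
	switch val.(type) {
	case int:
		return val, typInt, nil
	case string:
		return val, typString, nil
	default:
		return nil, 0, fmt.Errorf("unsupported type for column %q", v.name)
	}
}

// add is an expression defined in terms of two other expressions.
type add struct{ left, right expr }

func (a add) value(r *row) (interface{}, typ, error) {
	l, lt, err := a.left.value(r)
	if err != nil {
		return nil, 0, err
	}
	rv, rt, err := a.right.value(r)
	if err != nil {
		return nil, 0, err
	}
	if lt != typInt || rt != typInt {
		return nil, 0, errors.New("+ needs two ints")
	}
	return l.(int) + rv.(int), typInt, nil
}

// evalInt evaluates e against r and insists on an int result.
func evalInt(e expr, r *row) (int, error) {
	v, t, err := e.value(r)
	if err != nil {
		return 0, err
	}
	if t != typInt {
		return 0, errors.New("not an int")
	}
	return v.(int), nil
}

func main() {
	r := &row{cols: map[string]interface{}{"a": 2, "b": 3}}
	// a + (b + 10)
	e := add{left: variable{"a"}, right: add{left: variable{"b"}, right: constant{10}}}
	fmt.Println(evalInt(e, r))
}
```

Returning the type tag next to the value lets an operator like add reject a string operand without resorting to reflection.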

CSV

CSV is an iterable, using a CSV file as the source for the data. It’s lame, but works.

feeds again

I went back to poking my little RSS reader. This time I

- Added support for running it as a server. This is one solution to the lack of cron woe.

- Added a docker file to serve it, set up Caddy and dns so it has a reasonable name.

- Capped the number of entries served.

Cron woe

I had hoped to use normal cron and static serving. Sadly, cron no longer works in NixOS. I tried out writing a user systemd config, but it quickly became easier and more fun to write a little server instead.

XZ

I was interested in the recent XZ security problem.

Other links

Find some data

Normally everything is in Github, but github has taken down the repository and disabled accounts. Perhaps wise, but not helpful for me.

- Debian Salsa

  - perhaps I am an idiot, but I could not work out how to get the upstream version tarball from this.

- Tukaani has the git versions but not the release tarballs.

  - You need git checkout v5.6.1.

- archive.org has the tarballs.

This is interesting, you’d imagine that the first thing github would do is stop distributing the bad tarball.

Peek at the files

I ended up writing a little python script for parsing the output of find . -type f | xargs sha1sum. ~200 files are missing from git, mostly translations and docs. 35 files have different hashes.

There’s about 100 test files, over 500kb of stuff.
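The comparison itself is simple once both trees have been hashed. My script was python; the version below is a Go sketch of the same idea, not a port of it. The function names are made up and the hashes in main are placeholders, not real sha1sums from the xz release.

```go
package main

import (
	"bufio"
	"fmt"
	"sort"
	"strings"
)

// parseSums parses sha1sum output ("<hash>  <path>" per line)
// into a map from path to hash. A leading "./" is dropped so that
// listings taken from two different directories line up.
func parseSums(out string) map[string]string {
	sums := map[string]string{}
	sc := bufio.NewScanner(strings.NewReader(out))
	for sc.Scan() {
		hash, path, ok := strings.Cut(sc.Text(), " ")
		if !ok {
			continue
		}
		// sha1sum separates hash and path with "  " (text) or " *" (binary).
		path = strings.TrimLeft(path, " *")
		sums[strings.TrimPrefix(path, "./")] = hash
	}
	return sums
}

// compare returns the paths that exist only in the tarball, and the
// paths present in both whose hashes differ. Both lists are sorted.
func compare(tarball, git map[string]string) (missing, changed []string) {
	for p, h := range tarball {
		gh, ok := git[p]
		switch {
		case !ok:
			missing = append(missing, p)
		case gh != h:
			changed = append(changed, p)
		}
	}
	sort.Strings(missing)
	sort.Strings(changed)
	return missing, changed
}

func main() {
	// Placeholder hashes, just to show the shape of the input.
	tarball := parseSums("aaa  ./configure.ac\nbbb  ./m4/build-to-host.m4\nccc  ./README\n")
	git := parseSums("aaa  ./configure.ac\nddd  ./README\n")
	missing, changed := compare(tarball, git)
	fmt.Println("missing from git:", missing)
	fmt.Println("different hash:", changed)
}
```

Run find . -type f | xargs sha1sum once in the unpacked tarball and once in the git checkout, then feed both outputs in.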

reading the start of the attack

I followed along when reading coldwind.pl.

build-to-host.m4 is missing from git but is where problems start. Note that other M4 files are missing as well.

The grep on Line 86 matches the input.

% grep -aErls "#{4}[[:alnum:]]{5}#{4}$" .

./tests/files/bad-3-corrupt_lzma2.xz

And sure enough, expanding the M4 by hand gives

% sed "r\n" ./tests/files/bad-3-corrupt_lzma2.xz | tr "\t \-_" " \t_\-" | xz -d | sed "s/.*\.//g"

####Hello####

...

At this point it’s pretty clear you have a backdoor, but up to this point it is well hidden - unreadable shell and M4, compressed and corrupted payload, etc.

looking at the output

Lots of folks are looking at the binary blob. Note for future self:

objdump -d 2 > 2.disass

honeypot

A honeypot. The honeypot makes no sense, so I spent some time poking around the docker image.

Feeds

I missed having a simple RSS/atom reader, having used rawdog many years ago. I didn’t quite find what I was looking for, so I built a trivial replacement with gofeed. All it does is grab N feeds and put them into a simple html page.

I also looked at miniflux (suggestion) but it needs a database, which felt like overkill. goread is another option.

As always, the hard part was setup around the code. I ended up setting up Caddy on nucnuc, and fighting systemd to serve from /home/psn. ProtectHome=no is not enough, because the caddy user can’t stat /home. I ended up using /srv/www/. I added an activationScript to grant the psn user access to /srv/www.

I haven’t found a working solution for cron yet.

homebridge

I finally got around to setting up Homebridge. I started out trying to make it work as a docker container, but moved on to just giving it its own raspberry pi (install instructions). Homebridge needs to be on the same network as the other homekit stuff, and it needs to control mDNS, so giving it its own machine worked out best.

I have set up the Awair plugin (works fine) and would like to set up the Automower plugin.

I have also set up Caddy as a https proxy in front of Homebridge. This gives me SSL etc. I needed to setup tailscale to accept requests from the caddy user.

I also ended up building my own caddy with xcaddy and installing as per the docs.

Debugging Caddy:

journalctl -xeu caddy.service

self sniff

I have been wondering what sort of traffic my house sends to the internet. I fancied building a little sniffer.

Hardware

I used a NUC with three ethernet ports. One provides the normal connection, the other two are bridged together and plugged into the UDM and the starlink bridge adaptor. You can then sniff the bridge.

Nixos snippet.

networking.networkmanager.unmanaged = ["enp2s0" "enp3s0"];

networking.interfaces."enp2s0".useDHCP = false;

networking.interfaces."enp3s0".useDHCP = false;

networking.bridges = {

"br0" = {

interfaces = [ "enp2s0" "enp3s0" ];

};

};

Software

I wrote a program using gopacket. It

- sniffs traffic over the bridge

- aggregates by five tuple (TCP/UDP, source & dst IPs, source & dst ports)

- provides a little HTML page with stats.
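The aggregation step is mostly a map keyed on the five tuple. Here is a minimal sketch of that part on its own, leaving out gopacket and the HTML page. The type and function names are made up for illustration, and a real sniffer would fill in the key from decoded packet layers rather than from strings.

```go
package main

import (
	"fmt"
	"net/netip"
	"sort"
)

// fiveTuple identifies a flow. Every field is comparable, so the
// struct can be used directly as a map key.
type fiveTuple struct {
	proto            string // "tcp" or "udp"
	srcIP, dstIP     netip.Addr
	srcPort, dstPort uint16
}

func (k fiveTuple) String() string {
	return fmt.Sprintf("%s %s:%d -> %s:%d", k.proto, k.srcIP, k.srcPort, k.dstIP, k.dstPort)
}

// stats are the per flow counters.
type stats struct{ packets, bytes uint64 }

type aggregator struct{ flows map[fiveTuple]*stats }

func newAggregator() *aggregator {
	return &aggregator{flows: map[fiveTuple]*stats{}}
}

// add records one packet of the given size against its flow.
func (a *aggregator) add(k fiveTuple, size int) {
	s, ok := a.flows[k]
	if !ok {
		s = &stats{}
		a.flows[k] = s
	}
	s.packets++
	s.bytes += uint64(size)
}

// top returns up to n flows, biggest by bytes first.
func (a *aggregator) top(n int) []fiveTuple {
	keys := make([]fiveTuple, 0, len(a.flows))
	for k := range a.flows {
		keys = append(keys, k)
	}
	sort.Slice(keys, func(i, j int) bool {
		bi, bj := a.flows[keys[i]].bytes, a.flows[keys[j]].bytes
		if bi != bj {
			return bi > bj
		}
		return keys[i].String() < keys[j].String() // stable order for ties
	})
	if n < len(keys) {
		keys = keys[:n]
	}
	return keys
}

// flow is a small helper for building keys from strings.
func flow(proto, src, dst string, sport, dport uint16) fiveTuple {
	return fiveTuple{
		proto:   proto,
		srcIP:   netip.MustParseAddr(src),
		dstIP:   netip.MustParseAddr(dst),
		srcPort: sport,
		dstPort: dport,
	}
}

func main() {
	a := newAggregator()
	a.add(flow("udp", "192.168.1.10", "1.1.1.1", 5353, 53), 100)
	a.add(flow("tcp", "192.168.1.10", "93.184.216.34", 40000, 443), 1500)
	for _, k := range a.top(10) {
		fmt.Println(k, a.flows[k].packets, a.flows[k].bytes)
	}
}
```

Keying on the directed tuple means the two directions of a connection show up as separate flows, which is handy for spotting who is doing the talking.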

Observations

- The starlinkmon I set up a few months ago is pretty chatty, the top talker when the network is idle.

- Lots more things than I expected use UDP.

Joining videos with FFmpeg

Problem: join a bunch of videos together.

Many people took videos of themselves saying happy birthday for my mother in law. I had the task of joining them together with ffmpeg.

This was harder than it looked because:

- Some videos (shot on iPhone) used an HDR colour space. I ended up reshooting these in SDR.

- Some videos were different sizes, so I used ffmpeg to add letterboxes for the right sizes.

- Actually joining the videos results in quite a long ffmpeg command line.

I ended up writing a program to generate the command line; the result is below.

ffmpeg -i 1.MOV -i 2.mp4 -i 3.mp4 -i 4.mp4 -i 5.mp4 -i 6.mp4 -i 7.mp4 -i 8.mp4 -i 9.mp4 -i 10.MOV -i 11.mp4 -i 12.mp4 -i 13.mp4 -i 14.mp4 -i 15.mp4 -i 16.mp4 -i 17.mp4 -i 18.mp4 -i 19.mp4 -i 20.mp4 -i 100.mp4 -filter_complex '[0:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c0]; [1:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c1]; [2:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c2]; [3:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c3]; [4:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c4]; [5:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c5]; [6:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c6]; [7:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c7]; [8:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c8]; [9:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c9]; [10:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c10]; [11:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c11]; [12:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c12]; 
[13:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c13]; [14:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c14]; [15:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c15]; [16:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c16]; [17:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c17]; [18:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c18]; [19:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c19]; [20:v:0]colormatrix=bt709:smpte170m,scale=480x640:force_original_aspect_ratio=decrease:in_range=full,pad=480:640:-1:-1:color=black[c20]; [c0] [0:a:0] [c1] [1:a:0] [c2] [2:a:0] [c3] [3:a:0] [c4] [4:a:0] [c5] [5:a:0] [c6] [6:a:0] [c7] [7:a:0] [c8] [8:a:0] [c9] [9:a:0] [c10] [10:a:0] [c11] [11:a:0] [c12] [12:a:0] [c13] [13:a:0] [c14] [14:a:0] [c15] [15:a:0] [c16] [16:a:0] [c17] [17:a:0] [c18] [18:a:0] [c19] [19:a:0] [c20] [20:a:0] concat=n=21:v=1:a=1[outv][outa]' -map '[outv]' -map '[outa]' -vsync 2 -pix_fmt yuv420p -c:v libx264 -y ../output2.mp4
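The command above is very regular: one filter chain per input, then a concat over every video and audio pair. A generator for it can be sketched as below. This is a reconstruction from the command line, not the program I actually wrote, and the function names are made up. The filter string and the output flags are copied from the command.

```go
package main

import (
	"fmt"
	"strings"
)

// perInput is the filter chain applied to every input video,
// copied from the command line above.
const perInput = "colormatrix=bt709:smpte170m," +
	"scale=480x640:force_original_aspect_ratio=decrease:in_range=full," +
	"pad=480:640:-1:-1:color=black"

// filterComplex builds the -filter_complex argument for n inputs.
func filterComplex(n int) string {
	var b strings.Builder
	// One scale-and-pad chain per input, labelled [c0], [c1], ...
	for i := 0; i < n; i++ {
		fmt.Fprintf(&b, "[%d:v:0]%s[c%d]; ", i, perInput, i)
	}
	// Then interleave each padded video with its own audio stream.
	for i := 0; i < n; i++ {
		fmt.Fprintf(&b, "[c%d] [%d:a:0] ", i, i)
	}
	fmt.Fprintf(&b, "concat=n=%d:v=1:a=1[outv][outa]", n)
	return b.String()
}

// ffmpegArgs returns the full argument list for joining inputs into output.
func ffmpegArgs(inputs []string, output string) []string {
	var args []string
	for _, in := range inputs {
		args = append(args, "-i", in)
	}
	return append(args,
		"-filter_complex", filterComplex(len(inputs)),
		"-map", "[outv]", "-map", "[outa]",
		"-vsync", "2", "-pix_fmt", "yuv420p", "-c:v", "libx264",
		"-y", output)
}

func main() {
	args := ffmpegArgs([]string{"1.MOV", "2.mp4", "3.mp4"}, "../output2.mp4")
	fmt.Println("ffmpeg", strings.Join(args, " "))
}
```

Returning a slice of arguments rather than one string means it can be handed straight to os/exec without worrying about shell quoting of the filter.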

billion row challenge

Problem: sort a billion rows

https://github.com/gunnarmorling/1brc

Generate a billion rows

The code does this by:

- Using the wikipedia page

  - using wikitable2csv for making CSV files.

- Consuming the five CSV files with a SQL statement.

  - Interestingly, there’s 5 files mentioned in the comment but 6 continents listed in the Wikipedia page.

    - Listed: Asia, Africa, Oceania, Europe, North America

    - Not listed: South America. Spot checking a few South American cities shows them missing.

- Selecting a uniformly random station.

- Selecting a Gaussian distributed value from the mean for that station.

- Repeat until enough points.
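The last three steps can be sketched in a few lines of Go. This is a minimal illustration rather than my generate.go: the station list and means in main are made up, the "name;value" line format and the standard deviation of 10 are assumptions based on my reading of the challenge, and the seed is passed in explicitly so runs are repeatable.

```go
package main

import (
	"bufio"
	"fmt"
	"io"
	"math/rand"
	"os"
	"strings"
)

// station pairs a name with its mean temperature.
type station struct {
	name string
	mean float64
}

// generate writes n "name;value" lines to w. Each line picks a
// uniformly random station, then a Gaussian value around its mean.
func generate(w io.Writer, stations []station, n int, seed int64, stddev float64) error {
	rng := rand.New(rand.NewSource(seed))
	bw := bufio.NewWriter(w)
	for i := 0; i < n; i++ {
		s := stations[rng.Intn(len(stations))]
		v := rng.NormFloat64()*stddev + s.mean
		if _, err := fmt.Fprintf(bw, "%s;%.1f\n", s.name, v); err != nil {
			return err
		}
	}
	return bw.Flush()
}

// generateString is a convenience wrapper that returns the output as a string.
func generateString(stations []station, n int, seed int64, stddev float64) (string, error) {
	var sb strings.Builder
	err := generate(&sb, stations, n, seed, stddev)
	return sb.String(), err
}

func main() {
	// Illustrative stations and means, not the real list.
	stations := []station{{"Hamburg", 9.7}, {"Dakar", 24.0}, {"Wellington", 12.9}}
	if err := generate(os.Stdout, stations, 5, 0, 10); err != nil {
		fmt.Fprintln(os.Stderr, err)
	}
}
```

The buffered writer matters at this scale: a billion unbuffered writes would spend most of the run in syscalls.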

The seeding of these random numbers is hard to figure out.

- one implementation seems to use 0.

- OpenJDK seems to use a combination of a setting, the network addresses, and the current time.

Golang reimplementation

generate.go is a reimplementation based on above. This takes

go run generate.go 247.27s user 9.32s system 98% cpu 4:19.81 total

And produces a 13G file.

Sorting a billion rows.

First attempt: simple.

process1.go is a simple implementation.

- open the file, read it with encoding/csv.

- have a map to a struct per city, accumulate into the struct.

- once every line is processed, project the map into a slice, sort the slice.

- print the output.
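The steps above can be sketched as below. This is an illustration of the approach rather than process1.go itself: the struct and function names are made up, and it assumes the "name;value" line format with a semicolon separator.

```go
package main

import (
	"encoding/csv"
	"fmt"
	"io"
	"sort"
	"strconv"
	"strings"
)

// cityStats accumulates the readings for one city.
type cityStats struct {
	name          string
	min, max, sum float64
	count         int
}

// process reads "name;value" records from r and returns per city
// stats sorted by city name.
func process(r io.Reader) ([]*cityStats, error) {
	cr := csv.NewReader(r)
	cr.Comma = ';'
	cr.FieldsPerRecord = 2
	byCity := map[string]*cityStats{}
	for {
		rec, err := cr.Read()
		if err == io.EOF {
			break
		}
		if err != nil {
			return nil, err
		}
		v, err := strconv.ParseFloat(rec[1], 64)
		if err != nil {
			return nil, err
		}
		s, ok := byCity[rec[0]]
		if !ok {
			s = &cityStats{name: rec[0], min: v, max: v}
			byCity[rec[0]] = s
		}
		if v < s.min {
			s.min = v
		}
		if v > s.max {
			s.max = v
		}
		s.sum += v
		s.count++
	}
	// Project the map into a slice and sort it by name.
	out := make([]*cityStats, 0, len(byCity))
	for _, s := range byCity {
		out = append(out, s)
	}
	sort.Slice(out, func(i, j int) bool { return out[i].name < out[j].name })
	return out, nil
}

// processString is a convenience wrapper for small inputs.
func processString(s string) ([]*cityStats, error) {
	return process(strings.NewReader(s))
}

func main() {
	res, err := processString("Hamburg;12.0\nDakar;30.1\nHamburg;8.4\n")
	if err != nil {
		fmt.Println(err)
		return
	}
	for _, s := range res {
		fmt.Printf("%s min=%.1f mean=%.1f max=%.1f\n", s.name, s.min, s.sum/float64(s.count), s.max)
	}
}
```

Storing pointers in the map avoids a second map write per line, since the struct can be updated in place.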

This runs in

go run process1.go --cpuprofile=prof.cpu 141.79s user 4.70s system 101% cpu 2:24.75 total

That is about 2m25s of wall clock time: 141.79s of user time and only 4.70s of system time.

profile

% go tool pprof prof.cpu

File: process1

Type: cpu

Time: Mar 3, 2024 at 3:52pm (GMT)

Duration: 144.04s, Total samples = 147.39s (102.32%)

Entering interactive mode (type "help" for commands, "o" for options)

(pprof) top10

Showing nodes accounting for 144.84s, 98.27% of 147.39s total

Dropped 137 nodes (cum <= 0.74s)

Showing top 10 nodes out of 58

flat flat% sum% cum cum%

131.25s 89.05% 89.05% 131.25s 89.05% syscall.syscall

6.57s 4.46% 93.51% 6.57s 4.46% runtime.pthread_cond_wait

2.25s 1.53% 95.03% 2.25s 1.53% runtime.usleep

1.41s 0.96% 95.99% 1.41s 0.96% runtime.pthread_cond_timedwait_relative_np

1.25s 0.85% 96.84% 1.25s 0.85% runtime.pthread_kill

1.07s 0.73% 97.56% 1.07s 0.73% runtime.madvise

0.74s 0.5% 98.07% 0.74s 0.5% runtime.kevent

0.19s 0.13% 98.20% 1.52s 1.03% runtime.stealWork

0.08s 0.054% 98.25% 131.77s 89.40% encoding/csv.(*Reader).readRecord

0.03s 0.02% 98.27% 132.16s 89.67% main.main

Nearly all time goes in syscalls, likely read.

file benchmark

Next stop was to write file_benchmark.go, to see how slow the disk was. It can read the file (single threaded) in 4.5 seconds.

Attempt 2: faster parser.

Trying with a 1Mb buffer: 138.45s user 2.33s system 101% cpu 2:19.06 total

Trying with a 1Gb buffer: 146.99s user 3.13s system 98% cpu 2:32.72 total

% go tool pprof prof.cpu

File: process2

Type: cpu

Time: Mar 3, 2024 at 4:58pm (GMT)

Duration: 152.15s, Total samples = 124.89s (82.08%)

Entering interactive mode (type "help" for commands, "o" for options)

(pprof) top10

Showing nodes accounting for 105.42s, 84.41% of 124.89s total

Dropped 108 nodes (cum <= 0.62s)

Showing top 10 nodes out of 58

flat flat% sum% cum cum%

36.11s 28.91% 28.91% 93.80s 75.11% main.(*reader).read

25.67s 20.55% 49.47% 36.65s 29.35% runtime.mallocgc

11.45s 9.17% 58.64% 11.45s 9.17% runtime.pthread_cond_signal

6.90s 5.52% 64.16% 11.17s 8.94% runtime.mapaccess2_faststr

5.49s 4.40% 68.56% 5.94s 4.76% runtime.newArenaMayUnlock

4.70s 3.76% 72.32% 4.70s 3.76% strconv.readFloat

4.62s 3.70% 76.02% 4.62s 3.70% runtime.memclrNoHeapPointers

3.94s 3.15% 79.17% 3.94s 3.15% runtime.madvise

3.29s 2.63% 81.81% 3.29s 2.63% aeshashbody

3.25s 2.60% 84.41% 33.81s 27.07% runtime.growslice

Next idea was to share buffers. 100 seconds, but still lots of GC.

Attempt 3: many threads

Before running out of time, I had a go at using many go routines. It was faster, but buggy.

Thoughts

The challenge is really “how fast can you read CSV?” which is a little odd. Would be better to use a better format.

Pi hole

Pi hole into docker

I’ve run a pi hole for a while now, but it would be helpful to free up the Pi it’s running on. So, one quick docker container later, it was running on the NUC.

I did make a mistake setting up tailscale, and ended up debugging it with /proc/*/net/tcp, which was new and interesting.
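For future me: the addresses in /proc/*/net/tcp are hex, and the IPv4 part is byte swapped on little endian machines. Here is a small decoder sketch. The function name is made up, it only handles the IPv4 form (8 hex digits), and it assumes a little endian host such as x86-64 or a Pi.

```go
package main

import (
	"encoding/hex"
	"fmt"
	"net/netip"
	"strconv"
	"strings"
)

// parseAddr decodes one local_address or rem_address field from
// /proc/net/tcp, for example "0100007F:1F90".
// Assumes IPv4 and a little endian host.
func parseAddr(s string) (netip.AddrPort, error) {
	host, port, ok := strings.Cut(s, ":")
	if !ok || len(host) != 8 {
		return netip.AddrPort{}, fmt.Errorf("not an IPv4 /proc/net/tcp address: %q", s)
	}
	raw, err := hex.DecodeString(host)
	if err != nil {
		return netip.AddrPort{}, err
	}
	p, err := strconv.ParseUint(port, 16, 16)
	if err != nil {
		return netip.AddrPort{}, err
	}
	// The kernel prints the address as a host order 32 bit value, so
	// on a little endian machine the bytes come out reversed.
	ip := netip.AddrFrom4([4]byte{raw[3], raw[2], raw[1], raw[0]})
	return netip.AddrPortFrom(ip, uint16(p)), nil
}

func main() {
	ap, err := parseAddr("0100007F:1F90")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(ap)
}
```

The port is plain big endian hex, so only the address needs the swap.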

I would like to set up SSL for the web interface (so chrome stops complaining). I use tailscale for access, so I turned off the password to the web UI.

Caddy & SSL and redirects, oh my

The problem

I would like to serve a redirect from go.psn.af to the same hostname on tailscale. Ideally, I would like to serve it over SSL (now the default) and have the certs automatically renew. I also would like to avoid opening up port 80 or 443 for cert checks.

Solution

Initial setup:

- I’m currently hosting DNS on Google cloud DNS which is pretty good as a DNS server.

- Caddy is an easy web server that supports redirects. It also supports automatically getting an SSL cert using the ACME protocol. However, by default it doesn’t support using GCP DNS for the DNS ownership checks.

Solution: use the caddy-dns/googleclouddns plugin. This requires rebuilding caddy for docker. I initially tried the build from source instructions, before discovering Caddy’s docs on adding a plugin to an image (under “Adding custom Caddy modules”).

Once I had built a custom docker image and repointed DNS, everything just worked.

FROM caddy:2.7.6-builder AS builder

RUN xcaddy build \

--with github.com/caddy-dns/googleclouddns

FROM caddy:2.7.6

COPY --from=builder /usr/bin/caddy /usr/bin/caddy

I did have to provide GCP creds, which I’m a bit nervous about. I would like to set up some docker creds store.

homelab blog

I wanted a dead simple blog to keep track of stuff. I had been previously using a Google doc, but can do better. This is:

- Using jekyll for the actual blog.

- Using Google app engine for the hosting.

- This does come with SSL cert etc.

- Using GCP DNS for the DNS records.

I had thought github pages didn’t do custom domains, but looking closer they do. Maybe next time I will set that up. I tried to delay actually setting up the blog until I had some content, but I got that in January, so let’s write something. I think this is my 3rd blog. whoops.

x230

I picked up a x230 running coreboot as a nixos laptop. This is the result of the nuc basically being used for all sorts of little services and not really being useful as a desktop. I also thought it would be fun to have a little linux laptop again.

The good points:

- Nixos is great - installed easily, split my existing configuration into a common template, all is well.

The bad:

- The keyboard came with the UK layout which still sucks. I will have to try replacing it.

- Gosh, the spec and hardware are bad. I rebuilt the kernel for latencytop, and it took hours.

- I was also surprised at the lack of HDMI and the thinkpad power plug.

- I recall having one of these as my main laptop ~10 years ago.

Blackbox exporter

Just a simple probe to make sure the internet is working. Done just so I felt I was doing something.

local speedtest

Problem: is wifi too slow?

Considering buying a bigger AP.

Solution

librespeed - run it in a little docker container, have caddy proxy, tailscale, etc. Works great.

Tailscale to GCP DNS

Problem:

I want useful dns names for the tailnet.

Solution:

Program that creates A and AAAA records in psn.af for some tailscale names. Needs a tailscale tag to sync. Uses the tailscale API and the GCP DNS api.

Router proxy

Problem: unifi has no ssl certs

There’s a few solutions to this online:

- Run acme on the router, get ssl certs, import

- Import ssl certs from tailscale (tried, didn’t work)

With the UDM being at the other end of the house, I’m not worried by sniffing.

Solution:

Run a reverse proxy (in this case caddy) that serves SSL and proxies through to the router. Run a tailscale node next to caddy with the name “router”. Have caddy use tailscale’s SSL certs. Have Caddy redirect http traffic to the full ts.net name.

Now, when I go to http://router, I get a redirect to https, with a valid cert from tailscale. Traffic from the proxy to the UDM is also encrypted.

Future work

Can we use tailscale for auth for router login?

Starlinkmon

Starlink stats

I set up starlink exporter to provide some stats from starlink. It was pretty easy, and is looking good.

Network

I have the dish in bridge mode. Initially I read that the dish is on address 192.168.100.1, and all you need is a route to that address. That didn’t seem to work, resulting in me setting up sniffing to see some traffic from the app. Sniffing confirmed the address, and sure enough, it turns out that the dish does not respond to ping, but does respond to http to 9201. Was really easy to get the exporter and dashboard running.